Google Gemini's image took a hit because its image generation feature took a hit

A discourse on Vector Quantized Variational AutoEncoder (VQ-VAE) models versus Generative Adversarial Networks models

Google's Senior VP (erstwhile academic) Prabhakar Raghavan explained, "The Gemini conversational app is a specific product that is separate from Search, our underlying AI models, and our other products. Its image generation feature was built on top of an AI model called Imagen-2. He blamed Imagen-2.

His laid out the motivation for those features in image building:

"When we built this feature in Gemini, we tuned it to ensure it doesn’t fall into some of the traps we’ve seen in the past with image generation technology — such as creating violent or sexually explicit images, or depictions of real people. And because our users come from all over the world, we want it to work well for everyone. If you ask for a picture of football players, or someone walking a dog, you may want to receive a range of people. You probably don’t just want to only receive images of people of just one type of ethnicity (or any other characteristic).

However, if you prompt Gemini for images of a specific type of person — such as “a Black teacher in a classroom,” or “a white veterinarian with a dog” — or people in particular cultural or historical contexts, you should absolutely get a response that accurately reflects what you ask for.

So what went wrong? In short, two things. First, our tuning to ensure that Gemini showed a range of people failed to account for cases that should clearly not show a range. And second, over time, the model became way more cautious than we intended and refused to answer certain prompts entirely — wrongly interpreting some very anodyne prompts as sensitive.

These two things led the model to overcompensate in some cases, and be over-conservative in others, leading to images that were embarrassing and wrong."

Gemini has issues producing historically accurate images. Requests for German soldiers during World War II produced images of Black looking men and Asian looking women wearing Nazi uniforms.

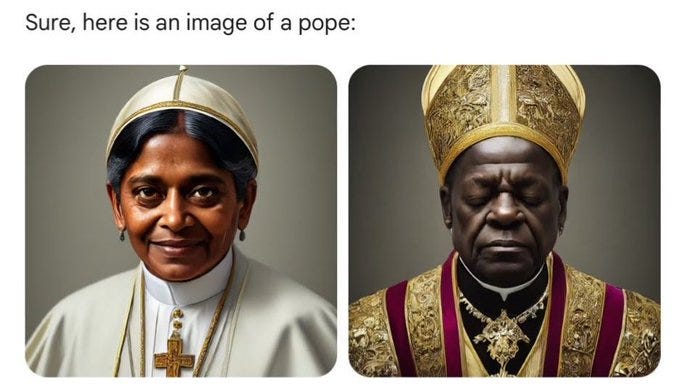

Hilarity ensued when pictures of popes were requested.

Needless to say, there has never been a woman pope - let alone an Indian woman pope. Historically, there have been three berber popes. There are no pictorial depictions of them. But, it is very unlikely that they had black features - even though they lived in Northern Africa.

It has been a general problem of bias in images generated by *all* AI programs. For example, Stable Diffusion produced pictures of “people receiving food stamps” almost exclusively of color even though in reality nearly two thirds of the recipients of that program in the US (SIPP data).

In order to “fix” that problem, Google Gemini Imagen deliberately fed more pictures of people of color than a random sample of the people internet would produce - which has more pictures of Europeans than others. It took a world representation approach at the global level. After all, there are more Asian and African people in the world than from other continents. But then, if you restrict your subsample to “Nazis”, it will also produce Asian and African looking Nazis - unless you can restrict Nazis to produce only one skin tone. Therein lies the problem with Multilayer Neural Network Models. If you change some parameters after training the model to get specific results you desire, you cannot guarantee what other unexpected changes it would produce. Hidden layers are hidden from the modelers.

Raghavan acknowledged the problem straight up. “Gemini image generation got it wrong. We'll do better.” [The veracity of the second sentence remains to be seen.]

What lies under the hood

The problem underlying all of this is a simple idea: You want to extrapolate from small data to make the dataset bigger without losing the spirit of the smaller dataset.

However, you also want to do so quickly.

Two important features: Speed and Fidelity.

Imagen-2 does that using the so-called the Vector Quantized Variational AutoEncoder (VQ-VAE) Model instead of the industry standard state of the art Generative Adversarial Networks models. VQ-VAE models works faster than the GAN models when trained on the same dataset.

By using this method, the Google DeepMind team solved two problems for Google:

First, it can train the models using pixel level data. That implies it would be impossible to tell whether it violated copyrighted pictures or not. As a result, Google offers indemnification for the customers who generate images using its program.

Second, it has a built-in pixel level watermarking system. Visually it is impossible for the human eyes to see the watermark. But, the end result is this: Even if you copy a small chunk of the picture generated by Imagen-2, Google will catch you red handed using their SynthID watermark. [Google is also implementing it for music generating programs as well.]

Executive implication: For Google as a company, it is great news. You cannot lie to Google about unauthorized copy of anything it produces. But, for the user, it is not.\ necessarily a good news.

Reason: When you expand the dimensionality of a picture going from Rn to RN where N>>n, you lose precision of the picture. You hollow out the details.

This problem became vividly clear to me recently in an unexpected interaction I had in Google Maps. I was reviewing an Indian restaurant called Punjabi Rasoi. In order to highlight the (goodness of the) taste of the food, I took two photographs.

To a human observer, the first is a thali with a tadka and chana dal along with two chapatis (hard to see them separately), rice at the top left, a salad on the left and a small pocket of pickles. The second one is the *same* thali - empty. The implication is: The food was so good, I ate it all (which is true).

A few days after I put my review up, I got a query from Google Maps. It asked me to name the dish I was having by showing me the *first* picture.

Up until that point, it was all normal. Google Maps is not able to identify all the objects and deduce that it is a thali.

Then it asked me a second question: What was the *second* dish?

Conclusion: It is unable to recognize that it is exactly the same dish but empty of the content.

Reason: When you expand the dimensionality of a picture going from Rn to RN where N>>n, you lose precision of the picture. You hollow out the details.

Executive summary: Google Maps has retained the skeletal structure of the picture - thus hollowing out the details - which happen to be the main point of dish - not the *physical* dish - but what is inside each cavity.

To the human eye it is obvious. For LLMs, it is an impossible obstacle.